```python
# Define data for encoder and decoder
input_token_id = {char: i for i, char in enumerate(input_chars)}
target_token_id = {char: i for i, char in enumerate(target_chars)}

encoder_in_data = np.zeros((len(input_texts), max_encoder_seq_length, num_encoder_tokens), dtype='float32')
decoder_in_data = np.zeros((len(input_texts), max_decoder_seq_length, num_decoder_tokens), dtype='float32')
decoder_target_data = np.zeros((len(input_texts), max_decoder_seq_length, num_decoder_tokens), dtype='float32')

# One-hot encode every character of every sentence pair
for i, (input_text, target_text) in enumerate(zip(input_texts, target_texts)):
    for t, char in enumerate(input_text):
        encoder_in_data[i, t, input_token_id[char]] = 1.
    for t, char in enumerate(target_text):
        decoder_in_data[i, t, target_token_id[char]] = 1.
        if t > 0:
            # The decoder target is the decoder input shifted one timestep ahead
            decoder_target_data[i, t - 1, target_token_id[char]] = 1.
```

The lines of code below define the input sequence for the encoder and process it. After that, the initial state for the decoder is set up using `encoder_states`.

```python
# Define and process the input sequence
encoder_inputs = Input(shape=(None, num_encoder_tokens))
encoder = LSTM(latent_dim, return_state=True)
encoder_outputs, state_h, state_c = encoder(encoder_inputs)
# We discard `encoder_outputs` and only keep the states.
encoder_states = [state_h, state_c]
```
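The relationship between `decoder_in_data` and `decoder_target_data` can be illustrated on a single target string. This is a minimal sketch, assuming the usual teacher-forcing arrangement in which the target is the decoder input shifted one timestep ahead; the helper name `teacher_forcing_pairs` is hypothetical and the toy sentence is not taken from the dataset.

```python
def teacher_forcing_pairs(target_text):
    """Pair each character fed to the decoder with the character it should predict next."""
    return [(target_text[t], target_text[t + 1]) for t in range(len(target_text) - 1)]

# Toy target (assumption): '\t' marks the start of the sequence, '\n' marks the end.
target_text = "\t" + "Va" + "\n"
pairs = teacher_forcing_pairs(target_text)

for step, (fed, expected) in enumerate(pairs):
    print(step, repr(fed), "->", repr(expected))
```

At each step the decoder receives the current character and is trained to output the following one, which is why the start marker never appears as a target and the end marker never appears as an input.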
```python
# Vectorize the data
input_texts = []
target_texts = []
input_chars = set()
target_chars = set()

with open('fra.txt', 'r', encoding='utf-8') as f:
    lines = f.read().split('\n')

# Use at most num_samples lines; the final element is skipped because it is
# empty when the file ends with a newline.
for line in lines[: min(num_samples, len(lines) - 1)]:
    input_text, target_text = line.split('\t')
    # '\t' marks the start of a target sequence and '\n' marks its end.
    target_text = '\t' + target_text + '\n'
    input_texts.append(input_text)
    target_texts.append(target_text)
    input_chars.update(input_text)
    target_chars.update(target_text)

input_chars = sorted(input_chars)
target_chars = sorted(target_chars)
num_encoder_tokens = len(input_chars)
num_decoder_tokens = len(target_chars)
max_encoder_seq_length = max(len(txt) for txt in input_texts)
max_decoder_seq_length = max(len(txt) for txt in target_texts)

# Print size
print('Number of samples:', len(input_texts))
print('Number of unique input tokens:', num_encoder_tokens)
print('Number of unique output tokens:', num_decoder_tokens)
print('Max sequence length for inputs:', max_encoder_seq_length)
print('Max sequence length for outputs:', max_decoder_seq_length)
```

After getting the data set with all features, we will define the encoder input data, the decoder input data, and the decoder target data.
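The loading step can be tried on a small in-memory string before pointing it at the real file. The sketch below assumes each line holds exactly two tab-separated fields (source sentence, then target sentence); the function name `load_pairs` and the two sample lines are hypothetical stand-ins, not contents of `fra.txt`.

```python
def load_pairs(raw, num_samples):
    """Split 'source<TAB>target' lines into two parallel lists of strings."""
    lines = raw.split("\n")
    input_texts, target_texts = [], []
    # Skip the trailing empty element produced by the final newline.
    for line in lines[: min(num_samples, len(lines) - 1)]:
        source, target = line.split("\t")
        input_texts.append(source)
        # Wrap the target in start ('\t') and end ('\n') markers.
        target_texts.append("\t" + target + "\n")
    return input_texts, target_texts

# Toy data (assumption): two sentence pairs, newline-terminated.
raw = "Go.\tVa !\nHi.\tSalut !\n"
inputs, targets = load_pairs(raw, num_samples=10)

input_chars = sorted(set("".join(inputs)))
target_chars = sorted(set("".join(targets)))
print("Samples:", len(inputs))
print("Unique input tokens:", len(input_chars))
print("Unique output tokens:", len(target_chars))
print("Max target length:", max(len(t) for t in targets))
```

If a copy of the dataset carries extra tab-separated fields on each line, the two-value unpacking in `line.split("\t")` would raise a `ValueError` and would need adjusting.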

```python
# Import libraries
import numpy as np
from keras.models import Model
from keras.layers import Input, LSTM, Dense
from keras.utils import *
from keras.initializers import *
import tensorflow as tf
import time, random
```

After importing the libraries, we will specify the values of the hyperparameters: the batch size for training, the latent dimensionality of the encoding space, and the number of samples to train on.

```python
# Hyperparameters
batch_size = 64      # batch size for training
latent_dim = 256     # latent dimensionality of the encoding space
num_samples = 10000  # number of samples to train on
```

In the vectorization process, the collection of text documents is converted into feature vectors. The data vectorization step reads the file containing the English sentences and their corresponding French sentences.
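As a toy illustration of turning text into feature vectors, the sketch below builds a character-to-index mapping and one-hot encodes a short string. It uses plain Python lists rather than NumPy arrays to stay dependency-free; the helper names `build_vocab` and `one_hot` and the sample strings are assumptions made for illustration only.

```python
def build_vocab(texts):
    """Map each distinct character to an integer index (sorted for determinism)."""
    chars = sorted(set("".join(texts)))
    return {ch: i for i, ch in enumerate(chars)}

def one_hot(text, vocab):
    """Return one row per character, with a single 1.0 at that character's index."""
    rows = []
    for ch in text:
        row = [0.0] * len(vocab)
        row[vocab[ch]] = 1.0
        rows.append(row)
    return rows

# Toy stand-in (assumption) for the collection of text documents.
texts = ["go", "hi"]
vocab = build_vocab(texts)
vectors = one_hot("hi", vocab)

print(vocab)
print(vectors)
```

Each sentence becomes a matrix with one row per character and one column per vocabulary entry, which is the same layout the 3-D arrays in the tutorial hold for every sample.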


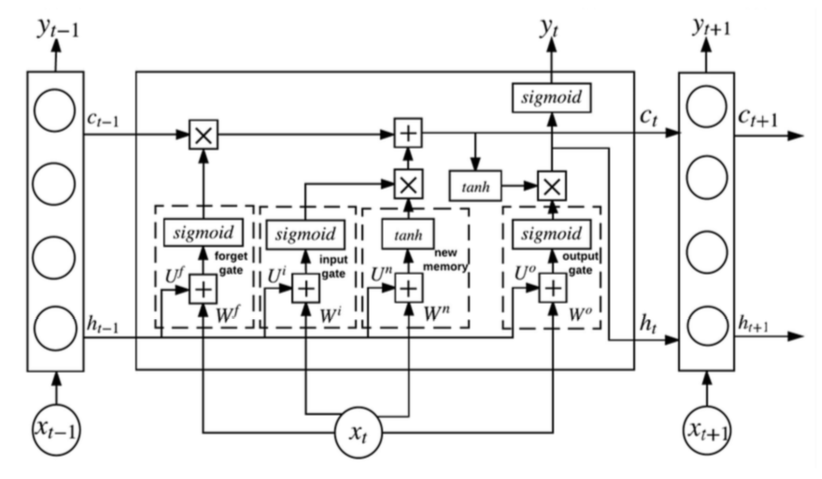

In this article, we will implement Sequence-to-Sequence (Seq2Seq) modelling with deep learning for language translation. The approach converts short English sentences into their corresponding French sentences, using an LSTM encoder and an LSTM decoder to process the sequences. The data set used in this experiment is the publicly available French-English Bilingual Pairs collection from Kaggle, which contains French sentences paired with their English translations.

Implementation of Sequence-to-Sequence (Seq2Seq) Modelling

First of all, we will import the required libraries. This program was executed in Google Colab with a hosted runtime. If you are working on your local system, make sure to install TensorFlow before executing this program.
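For a local setup, a quick way to confirm that TensorFlow is available before running the rest of the program is to ask Python whether the module can be located. This is only a sketch: the helper name `is_installed` is hypothetical, and the suggested `pip` command assumes a standard pip-based environment.

```python
import importlib.util

def is_installed(module_name):
    """Return True if a top-level module with this name can be found."""
    return importlib.util.find_spec(module_name) is not None

if is_installed("tensorflow"):
    print("TensorFlow found.")
else:
    # Assumption: a pip-based environment.
    print("TensorFlow not found; install it first, e.g. with `pip install tensorflow`.")
```

The check locates the package without importing it, so it returns quickly even though TensorFlow itself is slow to load.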
